I am a manager whose smart colleagues, in a slowing industry, want to know how they can do well while doing good amid the techlash. I am also a few degrees of separation from many important decision-makers in the tech industry. From my Stanford class (2007), that’s not hard to do: an Instagram founder and the OpenAI CEO both roamed my dorm hallways. Nice, thoughtful people, creating what some now consider weapons of mass destruction.

I see constant battles of competing frames, all with elements of truth. ChatGPT the assistant vs. ChatGPT the job thief. Downsizing to sustain a company versus downsizing to boost investor profits. As I look around at tech industry leaders, and see their lofty visions and decisions met with backlash and criticism, I’m left wondering what good stewardship looks like. It is definitely not the summation of responding to all individual pushes and pulls; that is a recipe for wholesale discontent.

And we humans, with all our complexity, can hold competing ideas in our heads at the same time. We might recite one belief on a Tuesday and a clashing belief on a Thursday, depending on mood and context. So is there any way to get it “right”: to reach holistic “right” answers that will displease many, while also treating everyone “right” in the process? Put another way, can empathy scale at the same time as organizations and systems do?

Meeting the needs of many

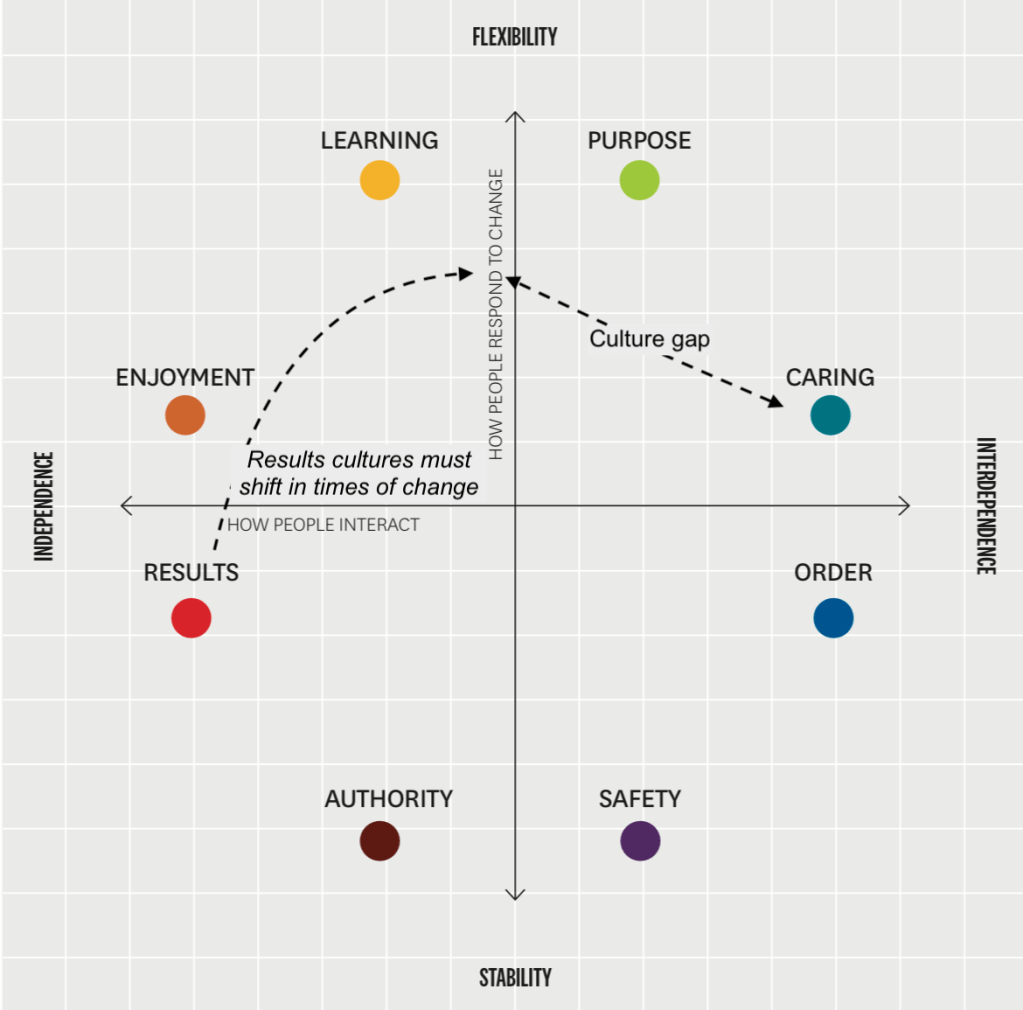

As I considered how scaled decision-making can balance logic and empathy, I reflected on a model of corporate culture developed at Harvard Business School and Spencer Stuart. It describes eight types of business cultures. Results cultures, which value achievement and winning, are in conflict with Caring cultures, which focus on relationships and trust. This seems a close proxy for my logic + empathy values and left me wondering: what is my ideal culture, and can it even exist? Is there a way to minimize or manage the Results/Caring “culture gap” (i.e., the distance between two conflicting cultures)? Can I get to the best answer for everyone, even when there is implied tension between optimizing for outcomes and optimizing for people?

Below I explore the question of empathy at scale, drawing on insights from philosophy, psychology, and technology. The TL;DR is 1) it depends on what you mean by empathy; 2) by commonly used philosopher definitions, empathy is not scalable, but 3) perhaps that’s for the best.

The debate and definitions

It turns out social psychologists and philosophers have already sparred on this question for a while. Professor Paul Bloom, psychologist and author of Against Empathy: The Case for Rational Compassion, framed the question as, “What kind of intellectual and sentimental attitudes should we have towards people?” He opens his argument with an important distinction: empathy is the ability to understand and share the feelings of another person, not to be confused with compassion, the desire to alleviate their suffering. Buddhist monk Matthieu Ricard similarly flags that empathy carries a sentimentality, whereas compassion allows for some distance. (I guess we know how Matthieu would answer the trolley problem.)

But does emotional distance serve the greater good or just ourselves, protecting us from the emotional strain? There’s evidence on both sides. Professor Paul Slovic compared how much people cared about one suffering person versus that same person in a group and found that people cared less about the original person as the group grew. Our brains seem to be managing our capacity, so that we aren’t completely drained by the sheer volume of people to empathize with. Our psychological immune systems seem to prevent us from caring too deeply about each individual in a large group.

But perhaps this brain-protective mechanism is, in fact, for the greater good. Bloom argues that limiting empathy can actually protect more vulnerable populations, because empathy is not immune to bias. We tend to empathize more with people who are similar to us, and less with people who are different. Imagine how this could shape decisions that affect a large group of people: problematic, to say the least.

So, it seems our greatest moral teachers are warning us away from empathy; its short-sighted nature can misdirect us to focus on the suffering of one rather than the many. It’s why we care more about a mass shooting than we care about the other 99.9% of gun homicides. When it comes to designing rules and legislation, we want something better than empathy. As philosopher Sam Harris puts it, “we want laws that are wiser than we are; a system that corrects for our suite of moral illusions or biases that lead us to misallocate real resources.”

The challenge is, good rules are very, very hard to make. So how do we take on this challenge at scale? This is the core question for organizations, as well as for the burgeoning AI industry.

Empathy in organizations and Artificial Intelligence

In the end, I do think logic and empathy can be married; the Results/Caring “culture gap” can be bridged by leaning into cultural moments of Purpose or Learning. For example, if you are pursuing a business reorganization, it may be the logical move, but the transition will be most successful if you take a Learning approach, testing elements of the reorg, and reaffirm shared Purpose. Both can help demonstrate Caring by valuing feedback and fostering solidarity. This flexible approach could also bring along as many sub-cultures as possible.

While operationalizing empathy in a practical, scaled way is a constrained process in organizations, the question becomes very different when we start outsourcing decisions to artificial intelligence (AI) systems. AI is clearly capable of overcoming some human constraints. But is the limit on empathy one of them? Becoming emotionally drained is a uniquely human constraint; we see this in personalized learning, where AI tutors express infinite patience with pupils. AI could be trained to understand and respond to a wide range of emotions; perhaps AI can learn to scale beyond the personal assistant use case to organizational assistance, and digest large volumes of feedback in an empathetic but balanced way.

The main dampener on this vision is that AI systems have not overcome every human limitation; they can still encode bias. And, worse than humans, they generally lack emotional intelligence and can be easily manipulated. So at this point, it would be extremely risky, with unquantifiable downside, to entrust decisions that require empathy or compassion to AI. As many an edtech expert has postulated, we need humans in the loop for all AI systems to evaluate their decisions and recommendations. The Partnership on Responsible AI recommends integrating inclusive participation across all stages of a development lifecycle (vs. participation only at the end, the more common practice).

Does empathy scale?

“I’ve learned that you can have it all, but not all at the same time.”

– Michelle Obama

FLOTUS’ words have stayed with me since I first read them. She warned that Millennials and younger generations often want everything all at once. While I want both logic and empathy at all times, I accept that their tension will sometimes force trade-offs.

There may not be a single “right” answer for all people, organizations and stakeholders, but we can manage a balance of logic and empathy by keeping humans in the loop, especially in the tech industry. Identifying ahead of time where human input should be integrated, and what targeted input you need at each point, can improve both organizational and AI decision-making.